On the fourteenth day of October in the year of our Lord 1984 the second episode of Murder, She Wrote aired. Titled Birds of a Feather, it features one of Jessica’s nieces and takes place in San Francisco. (Last week’s episode was Deadly Lady.)

We open with a man in an ugly track suit jogging on a road next to the sea. A man in a white suit gets out of his small car and starts jogging next to the man in the track suit, saying that they need to talk.

The man in the white suit needs his money, and the man in the track suit says that the man in the white suit will get it when he’s finished. We learn that the man in the white suit is named Howard, and that he won’t get a dime unless he is “there” tonight. Howard is unhappy but accepts this answer and the man in the track suit runs off.

When he gets to his car, it turns out that another man in a white suit is waiting for him.

Well, a man in a white jacket, at least. His name is Mike. He calls the guy in the brown track suit Al. Mike thought that they had a deal, and Al says that they do, Mike just needs to be patient. Mike says that he’s been patient for six months and he thinks that Al is just pulling his chain. Al asks if he got the money, and Mike replies that that’s his problem. Al then tells him to be careful. Things have been going real good, but he can live without Mike. Mike pokes Al in the chest for emphasis as he replies that anybody can live without anybody. Mike then leaves.

The dialog is intentionally vague to stir up the audience’s curiosity. If we want to learn what this is all about we won’t change the channel or go to bed early. Ironically, though, it’s actually far more realistic than the exposition one normally finds at the beginning of episodes. A typical show might begin with, “Well, if it isn’t Al Drake, manager of my favorite night club.” “Hi there Mike Dupont. Still hoping to buy out the contract of my lead act?” No one actually talks like that, though through exposure we come to accept it. I find it amusing that the realism is an accidental byproduct.

The scene then cuts to a young woman named Victoria who’s talking to a priest about her upcoming wedding.

It’s going to be a very simple wedding. Intimate. The priest says that they can still make it festive, with flowers on both sides of the altar, but Victoria says that she’s allergic to flowers.

When the priest asks exactly how intimate this wedding will be, she says that she just arrived from NY, her Aunt just arrived from Maine, and then just Howard and maybe a few of his friends.

Yes. That Howard.

He comes in a minute later and apologizes for being late, saying that traffic was terrible when he came from the office. Victoria tells him about dinner reservations she made and a minor fight ensues as he says that he can’t make it. In the fight we get a little backstory that he’s been busy every night for the last five nights.

The scene then shifts to Victoria and Jessica at the restaurant, where a small joke about the lobsters being Maine lobsters is made before they’re shown to their table. (The lobsters aren’t active; when Jessica asks if he’s sure that they’re Maine lobsters he says that they’re flown in fresh every day. Jessica says that perhaps the lobsters have jet lag.)

It’s an interesting restaurant.

Not very crowded, despite this being, in theory, a dinner engagement. That’s cheaper to film, of course. It’s very fancy in a dimly lit, hard-to-see-the-details kind of way. There were real restaurants like that back in the 1980s and for all I know, still are. It’s cheaper to look fancy if people can’t look too closely at the fancy stuff, both in TV and in real life.

Over dinner, Victoria tells Jessica about her history with Howard—she met him about a year ago in New York City. He was acting in an off-broadway show. He works in insurance (as his latest job—he had been a cab driver in New York), but aspires to be an actor. Then she breaks down and tells Jessica about her worries. She’s been in town five days but they haven’t gone out at night even once. And she went to Howard’s office the day before to surprise him and they told her that Howard hadn’t worked there for a month. Jessica says, knowingly, “Oh,” and takes a drink of wine.

She drinks it as if she wants the alcohol in it. I know I’m skipping ahead a bit, but this is very unusual for Jessica. (She rarely drinks except to comment on how fine the extremely rare wine which requires a refined palate to enjoy is.) I guess they’re still feeling the character out at this point.

Anyway, yesterday, Howard had circles under his eyes and smelled like perfume. And today he lent her a handkerchief and the lipstick on it was not her shade. And matches from a nightclub were all over his apartment. She’s considered going to the nightclub, but if she loves Howard, how can she justify spying on him?

Jessica replies, “For your own peace of mind, I think you have to.” Her tone suggests that this is sage advice, but it really isn’t. She could have said, “You can do it for Howard’s sake. If there’s something he’s afraid to tell you about, you can have the courage for him.” Or, “For the sake of the children you may have with Howard, you owe it to them to make sure you can both go through with the marriage.” Or “marriage shouldn’t be entered into with secrets and if he’s not strong enough to tell you his secrets, you should do it for him in case it’s something you can accept.” All of these actually address Victoria’s concern. Jessica’s reply that Victoria just needs to be more selfish is… bad advice.

The scene cuts to the night club, which is a relatively classy place.

Before long the camera goes to Al, who is filling in for the host, and a well-dressed man named Patterson walks in.

It turns out that he’s the agent for Freddy, a comedian with a four-year contract at the club. Al’s interpretation of their contract is that Freddy can’t do anything else, while Patterson’s interpretation is that Freddy is free to do other stuff on the side. Patterson recently got Freddy on a talk show and now he’s hot. Al, however, is unmoved, except in the sense that he says “this is what we have courts for” and walks off.

Jessica and Victoria come in. They ask for a table for two but the host says that he can seat them next Thursday. Victoria then identifies Al as being in charge from some posters on the wall and walks up to him, explains that she and her Aunt want a table, and then explains how famous Jessica is. Al sees to it that they’re seated immediately.

I’d like to pause to take note of what she actually says. Assuming that she’s telling the truth—and I suspect that she is—Jessica has six best-seller books, was on a talk show this morning, and will meet the mayor the next day. Since the pilot episode depicts Jessica’s first book being published, obviously a lot of time has passed between the pilot and the main series.

The first act we see is Freddy York, the performer whose agent showed up and talked with Al a few minutes ago.

His shtick is that he plays the drums as his own backup and does the rim-shots for his own jokes. His outfit is really amazing; I believe it’s intended to be sincere. The episode was shot in 1984, which was only four years after the 1970s when collars like this were hot stuff. I suspect it’s meant to indicate that he’s a little stuck in the past, but not very much. His jokes, incidentally, aren’t terrible, though they are neither very witty nor very classy. After a few of them, we cut to a glamorous older woman walking in.

It turns out that this is Al’s wife. Since Al’s last name is Drake, she’s Mrs. Drake. The host greets her very politely, but there’s a bit of ice in the air. When he asks if Al is expecting her, she replies she very much doubts it. She’s shown to her table immediately, of course. Once she’s on her way to her table, the host grabs a bus boy and tells him to go find Al and tell him that his wife is here.

As the busboy is looking for Al back stage, he runs into a woman who asks him what he’s doing back stage.

We actually saw her before and it seemed so minor an interaction I didn’t think it worth mentioning. She had some banter with Al before Freddy’s agent came in. Since she may play a bigger role than I anticipated: her name is Barbara. Anyway, she tells the busboy to go back to the front and she’ll tell Al.

The moderately funny comedian who does his own rim shots tells a final joke—which Mrs. Drake applauds vigorously—then he profusely tells the crowd that they’re beautiful, wonderful, and every good thing, then takes his leave. Jessica then asks Victoria if she’s noticed that there’s something a little off about this club. Victoria doesn’t know what Jessica means. Frankly, neither do I.

Somebody in a silver dinner jacket then introduces the “chanteuse” they’ve all been waiting for.

After a few introductory bars and the length of time it takes to sing “There’s a somebody I’m longin’ to see. I hope that he turns out—” we hear a scream. Then a female figure in a fancy dress runs off the stage and through the crowd, towards the front door. Right behind it, Barbara runs onto the stage and calls out, “Stop him! He’s a murderer!”

A police officer shows up at the front door cutting off that exit, so the figure then tries several other avenues of escape before crashing into Jessica and Victoria’s table. His wig falls off and we get to see who it is.

It’s a surprise, though it shouldn’t be. This is exactly the kind of twist that TV shows of the 1980s loved, all the more so right before a commercial break. Which is what happens after some shocked recognition between Howard and Victoria and Jessica being surprised that this is Howard.

We come in from commercial to an establishing shot of a police car driving with its sirens on, followed by an interior of the club in confusion. Amidst the confusion we do learn that Al was shot.

Howard is being kept locked in a room with a security guard keeping watch on the door. Victoria comes up and persuades the guard to let her in. She’s so happy that it turns out the thing he was hiding was just a job that most of what they do is kiss until Lt. Novak shows up and is surprised to see them passionately embracing. He takes it in stride, however, and merely asks the security guard which one is the suspect (“the tall one”) then directs that he be taken down to the station and booked.

The scene then shifts to the scene of the murder, with Lt. Novak entering and taking charge.

His manner is very matter-of-fact. Interviewing the assembled crowd of people, he asks who saw the murder and Barbara answers that she did, or, rather, she walked past the open door and saw Howard standing over Al holding the gun. The Lt. looks at the ground and sees a gun. Picking it up with a pencil he remarks that, having a smooth grip, they may get some fingerprints from it.

This musing is interrupted by the sound of a bird—a white cockatoo—cawing and then Jessica interrupts to ask if Lt. Novak noticed a small white feather on Al’s jacket. Instead of answering, the Lt. asks her to leave. The manner is curious; he asks if she’ll do him a big favor and she eagerly replies that she’ll do anything at all to help. He then asks her to get out of here and she is crestfallen. Apparently, by now, Jessica is used to joining the police on murder investigations.

The scene changes to the next day, at the police station, in Lt. Novak’s office, with Lt. Novak finishing interviewing Freddy York (in the same clothes as he was wearing on the night before). After signing his statement, Freddy expresses his lack of sympathy and leaves. Right after, Jessica knocks on the door and enters. Novak doesn’t want to talk with her but she uses her clout and fame to bully him into cooperating.

He relents and gives her a brief infodump. The suspect was seen standing over the body holding the gun. The only fingerprints on the gun belong to the suspect. It was common knowledge that he’d been arguing with Al Drake about money. The gun was stolen from a pawn shop about six months ago, in New York City, where Howard lived at the time.

She asks if he conducted a nitric acid test to determine whether Howard fired the gun. He replies that they haven’t gotten to it yet, and she tells him that he’d better get to it soon because after a few hours the test is meaningless. (According to Wikipedia, this is accurate. Gunshot residue tends to only last on living hands for 4-6 hours since it is easily wiped off by incidental contact with objects.) Since the murder took place the previous night and it is now past sunrise, the crucial window has already expired, so it’s a bit weird that Jessica is telling the Lt. to get to the gunshot residue test soon. (A nitric acid solution is used to swab the area to be tested as the first step, which is, I believe, why she’s referring to it as a nitric acid test.)

She then demands to see Howard and doesn’t take ‘no’ for an answer.

Howard is brought to the office of the Lt., who gives them privacy for some reason. Howard is confused since he’s never met Jessica before, but she takes charge. Jessica directly asks him if he killed Al Drake and he says he did not, Al was dead when he walked into the room. He had just finished his act and went into Al’s office to get his money and quit. This is a bit odd because we saw the act right before Al was found dead and it was Freddy’s comedy routine. (I suspect that this is just a plot hole and not a hole in his story.) We get a flashback which seems plausible enough with Howard having a one-sided conversation with Al for a bit, since Al was facing the wall, and he only realized that Al was dead when he turned Al’s chair around to make him talk to him. Since this may be important later (someone may have thought Al was alive when he was actually dead), let’s look at how the chair was when Howard entered the room:

You can’t see anything that indicates that Al is dead, but on the other hand this is a very weird thing for a living man to do. If you came into a room and saw a man sitting in a chair motionless staring at a dark wall, I think you’d be a lot more likely to check on him than to just assume he’s lost in thought. That said, there’s a good chance that this indicates a significantly earlier time of death.

Anyway, after finally turning the chair around when he got tired of Mr. Drake “ignoring” him, he staggers around in shock for a bit, notices it’s incriminating that he’s holding the gun that probably shot Mr. Drake, then Barbara comes in the door, sees the scene, and screams, at which point Howard panics and bolts.

Jessica says that she’s quite relieved because there’s only been one killer in the family, in 1777, and the red coat shot first. She then pivots to wondering what Barbara was doing in the office and Howard bowdlerizes to “Everyone knew that she and Mr. Drake worked late. A lot. Together.”

Jessica knowingly says, “I get the idea.”

She then says that she’s got the name of a very good lawyer and asks if there’s anything else he needs, to which he sheepishly replies, “pants.”

The scene then shifts to Jessica on the phone with Lt. Novak, presumably some time later. He lets her know that they’ve narrowed the time of the murder down to between 9:50 and 10:05. Jessica asks if that isn’t a bit precise for a medical examiner and he replies that it didn’t come from the medical examiner, it’s when York was performing and the banging of his drums covered the sound of the shot.

There’s an interesting exchange which follows the end of their conversation. Lt. Novak asks his assistant, “What is it about that woman that makes me nervous?” The assistant replies, “I think she’s kind of cute.”

I find it interesting because it’s explicitly framing Jessica’s investigations. The police are officially not thrilled with Jessica investigating, but we—the audience—know that this is a mistake on their part. The assistant thus provides some ambiguity here. It certainly makes more sense than Amos Tupper taking both roles, as he did in Deadly Lady.

The scene changes to Jessica at the club during the day. She runs into Freddy’s agent for some reason. He asks if she has an agent on the West Coast, and she does. He directs her to where she can find Barbara (she asked), and then takes a moment to look suspicious for the camera.

I think that the equivalent of this, in a novel, is to give us a glimpse into the character’s thoughts which is highly misleading if taken out of context, which is how we get it. “‘I hope she doesn’t find out,’ he thought.” Then later we discover it was a different ‘she’ and the thing to not find out was something completely unrelated—if the book is halfway decently constructed, a red herring that the detective uncovers and this explains “why you were acting so funny when I spoke about [name].” It’s a bit of a cheap trick, but it does make the viewer/reader feel like they need to keep on their toes, which they want to feel like.

We then see Mrs. Drake talking to two men—the host and someone I don’t recognize. The upshot is that she’s intending to run things now that Al is gone. She also picks a fight with their leading female impersonator, who storms off to his dressing room. She yells at him to never turn his back on her and follows. Once they’re in his dressing room and close the door their manner changes entirely, they embrace, and passionately kiss. And on that bombshell, we go to commercial break.

When we get back, Jessica walks into Freddy’s dressing room by mistake, where he’s sitting at his mirror for some reason. He says that it’s too bad about Howard, the kid’s got talent and not just at wearing dresses. He makes some jokes about how his own talent is wasted in a dump like this; in Las Vegas a llama who’s part of an act has a better dressing room than he does. Jessica says that it’s not so bad and at least he’s got a window with a great view. He jokes that it’s his manager, Patterson: he couldn’t get Freddy any more money so he got him a window. When he asks if Jessica wanted to see him about something she excuses herself for intruding and leaves.

When Jessica finally finds Barbara, Mrs. Drake is firing her.

Jessica catches her carrying a box full of her stuff out of the office and offers to give her a lift in the taxi she’s in. Barbara accepts.

Jessica reads Barbara as a gossipy sort of woman and so plays a gossip herself. She shares the news that Al was already dead when Howard got there and Barbara accepts it without question. Barbara goes on to say that she wouldn’t be surprised if Mrs. Drake did it. She is also aware of the affair Mrs. Drake is having with the female impersonator (his name is Mike). He was actually trying to buy the club. She could also believe that Mr. Patterson killed Al because Freddy was under a seven-year contract.

She gets out at her apartment and the scene shifts to Mike waiting near the ocean for Mrs. Drake. I’ve just realized that Mike was the second guy who talked to Al at the very beginning of the episode. Asking Mike if he raised the money was probably a reference to buying the club.

Anyway, he complains that Mrs. Drake kept him waiting and asks if this is a sign of things to come. She’s apologetic and gets to the point: she wants to know if he killed Al (which, she professes, wouldn’t make any difference to her if he did). Funnily enough, he had the same question for her, and it also wouldn’t make any difference to him if she did. After some closeups in which the actors try to look as suspicious as humanly possible, the scene ends.

This sort of scene will become a staple of Murder, She Wrote episodes, especially towards the middle. Once you notice them it becomes way easier to figure out who the murderer is: whoever doesn’t get a closeup of them looking suspicious.

In the next scene Jessica catches up with Lt. Novak at the club. She inquires about the nitric acid test and it came back negative. The Lt. says that Howard could have been wearing gloves when he shot Al and Jessica points out that if he was, there wouldn’t have been fingerprints all over the gun and he can’t have it both ways.

Jessica then questions Lt. Novak’s theory about the gunshot being masked by Freddy’s drum act, so they do some experimentation with the assistant firing a gun in the murder room and Jessica and Lt. Novak in front of the stage with various amounts of noise being produced, and no matter how much noise, they still hear the shot. Lt. Novak takes that to mean that the only possible explanation is it being covered by the sound of Freddy’s drums. Why they tested every other source of noise except for Freddy’s drums isn’t explained.

Anyway, Freddy comes out and demands to know what’s going on—is Mrs. Fletcher suggesting that one of them killed Al? At that moment a string of heavy stage lights falls down almost killing Jessica and Freddy.

Freddy dives in front of the lights, Jessica steps back to avoid them. When the camera finds Freddy he’s on the ground holding his neck in great pain, probably from the dive and landing on the ground.

The scene then shifts to Jessica knocking on the door of Lt. Novak’s apartment the next morning. She woke him up but is only very slightly concerned at this given that Lt. Novak has been working all night again. She needs to talk to him about Howard.

He’s friendlier than normal, explaining that his hates-everyone approach is just his office persona. They go over the list of possible suspects, but for some reason he’s convinced that Howard is guilty. I don’t really get this because it’s at odds with his theory that the drums covered the sound of the gun—unless he’s willing to postulate that, after shooting Al, Howard just stood around holding the gun for up to a quarter of an hour.

Anyway, after Jessica goes over some facts which incriminate other suspects including the affair between Mrs. Drake and Mike—which Lt. Novak didn’t know—he tells her that she’d have made a great cop but asks her to leave the policing to the police. She responds that she wouldn’t dream of interfering, which is odd because she’s very clearly happy to interfere, for example demanding that Lt. Novak do a nitric acid test and demanding that he take time out of the investigation to talk to her or she’ll badmouth him on television.

Anyway, he clarifies that her interfering isn’t what he’s worried about. Lab results indicate that the lights falling wasn’t an accident. The rope was eaten through with acid. Jessica’s interpretation was that someone was trying to kill Freddy York. Lt. Novak’s interpretation was that she was the target. We get a wide-eyed reaction shot from Jessica then switch scenes to a courtroom where Howard is bailed out. Jessica apparently posted bail for him, since she tells him that if he jumps bail the state of California has an option on her next four books.

In the hallway as they are leaving, Jessica asks Howard if he saw Mrs. Drake backstage during Freddy’s performance and he’s sure that he didn’t, but he did see her come in the stage door just before he went on. This isn’t very helpful to us because we never saw him on stage and there wasn’t really a time for him to have been on stage, but it helps Jessica because she isn’t deterred by plot holes in the same way that the audience is.

Accordingly, she goes and visits Mrs. Drake, who is playing golf. In between insincere condolences Jessica asks if Mrs. Drake saw her husband shortly before he died and she said that she didn’t, she came in during Freddy’s set. Jessica replies that it’s strange, then, that someone said they saw her come in before Freddy’s set. Mrs. Drake takes offense at this and says that she didn’t kill her husband, and if Jessica insists on sticking her nose where it doesn’t belong, she should look into Freddy York. His contract was a personal services contract with Al, not with the club, and when she brought Freddy flowers in the hospital, Freddy gave her notice that he was quitting.

I do need to partially take back what I said about us never seeing Howard on stage. Just in case I missed something I went back and it looks like Howard actually was on stage a little before Jessica and Victoria came in. If you look closely during the opening shot at the club, you can see Howard on the stage:

You never see him clearly and almost immediately the camera pulls back and focuses on other things. And we’re looking at this in DVD quality. In broadcast quality back in 1984, it would have been extremely hard to make that out as Howard. Anyway, this introduces a timing problem. Howard confronted Al Drake after Freddy York’s set was over, but according to Howard, also, “I finished my act, then I went back to his office to quit and get my money.” In the flashback he was wearing his stage costume. This means he spent the entire length of Freddy’s performance doing nothing before he went to confront Al.

Anyway, Jessica takes Mrs. Drake’s story about visiting Freddy in the hospital to mean that Freddy is well enough to receive visitors and decides to pay a visit to Freddy herself. Accordingly, the scene shifts to the hospital, where Freddy and his agent are drinking champagne and celebrating all of the great things they’re going to do now that Freddy is free. Jessica walks in and Bill Patterson (the agent) basically yells at her to stop investigating, since neither he nor Freddy killed Al, and Freddy was not only on stage when it happened, someone later tried to kill him with the lights. Jessica replies that that’s a bit of a puzzler, since Mike thinks the lights were an attempt to kill him, Lt. Novak thinks that they were an attempt to kill Jessica, and Bill thinks that they were an attempt to kill Freddy. And on that… bang snap… we go to commercial break.

When we get back, Jessica is walking to her hotel room while Howard and Vicki argue over whether they should postpone the wedding (Howard says yes until he’s cleared, Vicki says no, they should get married right away). They ask Jessica what she thinks and what she thinks is that she needs a nap. Vicki asks if the builders working away in the room next to Jessica’s won’t keep her awake.

Jessica replies that right now she could sleep through Armageddon. She then tells them that she promises that they will get to the bottom of this. She’s sure she’s overlooking something, and it will come to her if she gets some sleep.

Jessica goes and lies down, but contrary to her imagined ability to sleep through Armageddon, all of the power tools do keep her up. She then holds the pillow over her ears…

…and comes to a realization of what she had been overlooking.

She then shows up in Lt. Novak’s apartment. What she had forgotten was the small white feather on Al Drake. Drake wasn’t shot during Freddy’s performance because the killer used a silencer! When Lt. Novak objects that they don’t make a silencer for that kind of gun, Jessica says that it wasn’t a metal silencer. It was a pillow. That explains the small white feather, which didn’t come from the cockatoo in Drake’s office. (It was an office pet.)

They then go to the scene of the murder and Lt. Novak picks up the pillow in the office and it has no bullet hole. But, Jessica points out, the pillow wasn’t there on the night of the murder. Don’t take her word for it, look at these police photos. When asked how she got police photos, Jessica says that his assistant, Charlie, gave them to her. He really is a very nice man.

Anyway, this shows that the pillow that’s there now was placed there after the investigation, presumably because the one that was there had to be removed because it was damaged when it was used to muffle the sound of the shot.

Jessica then asks Lt. Novak to take part in an experiment. They go to the stage and she has Lt. Novak stand in a precise location on the stage, then goes backstage and drops some sandbags on him. Or would have, had Lt. Novak not stepped out of the way when he heard the sandbags descending. She points out that he heard it, and he replies that of course he did, he’s not deaf. Jessica replies, “and neither was Freddy York.”

At this, Freddy steps out from back stage, applauding. He tells Jessica that she’s quite a performer. She says that it was quite a performance that he put on, diving off the stage when he didn’t have to.

Freddy counters that all she’s proved is that he could have staged the falling lights.

I’m not sure how she’s supposed to have proved that. All she proved—to the degree that she proved anything—was that Freddy was able to get out of the way of the lights because he would have heard them. But that was never at issue. He did get out of the way of the lights, so he got out of the way somehow, and hearing them just as Lt. Novak did is as good a way as anything else. Weirdly, though, it required no proof that he could have staged the lights because the rope was eaten through with acid, which he could have put on the rope before coming out on stage, because anybody could have put the acid on the rope before Freddy came out on stage.

Anyway, he goes on to say that this doesn’t prove that he had anything to do with what happened to Al Drake and while Freddy would love to stick around, he’s got to fly to Vegas—he hopes his arms don’t get tired. He then tells them that they’re beautiful and leaves.

Jessica motions to Lt. Novak to follow, and they do.

In Freddy’s dressing room Jessica points out that the pillow which was used to replace Al’s pillow was from Freddy’s dressing room because it is sun-faded, just like his settee, and it’s the only one that is because Freddy’s is the only dressing room in the building with a window. (The pillow does have a lighter side, though until Jessica said that it was sun-faded I thought it was just two-toned.)

Somehow it being the pillow from Freddy’s dressing room which was used to replace Al Drake’s pillow in the days following the murder means, conclusively, that Freddy is the murderer. Luckily for Jessica Freddy can’t see any way out of this logic and admits it. “It’s my luck. It’s my dumb luck. Half the people in this club wanted Drake dead, and your niece’s boyfriend’s gotta get tagged for it. I knew you were trouble as soon as I saw you. What was I gonna do? Spend the rest of my life working in this rinky-dink club? You ever try to tell jokes when someone’s got their hand on your throat?”

Jessica shakes her head and says, “Surely, murder isn’t the answer.”

This prompts Freddy into a monologue.

You call it murder. I call it a career move. Look at me. What do you see? I’m not just another comedian. I’m Freddy York. I’m the first guy who did his own rim shots. I’m like the Edison of Comedy. I’m Robert Fulton on the drums. So Al Drake sees me one Sunday night. He says, “Kid, you’re good. Here’s a long-term contract. It’s your shot. Your big break.” He broke my spirit. That man broke my heart. I couldn’t let him do that. I’m a creative genius. Fair is fair. He gave me a shot. I gave him a shot. Ba dum bum. Should’ve shoved you under that stage light.

When Lt. Novak asks him why he rigged the lights, he merely replies that Novak should ask Jessica. She says the obvious, that he thought the charges against Howard would get dropped and a murder attempt on him would point suspicion elsewhere. Freddy then says, “Boy, you are good. I mean, you are really, really good. You ever think of taking your act on the road? You should play Vegas. That reminds me, I better cancel my tickets. Doesn’t look like I’m going. It’s too bad. I could’ve knocked ’em dead.”

Jessica nods and says, gently, “I’m sure you would have.”

We then cut to the wedding ceremony for Howard and Victoria. There are a few curious things about it; one is that we come in on “by the power vested in me by the state of California, I now pronounce you man and wife.” But this is in a church and it’s a priest who’s performing the ceremony. Those are the words spoken by a justice of the peace at a state ceremony. It’s interesting that here in 1984 they’re so hard-core secular.

The other interesting thing is the guest list:

It’s just the people from the episode, none of whom had a connection to Howard or Victoria. A cynical man might think that this was mostly done just to save money on casting.

I might be that man.

Anyway, after the vows are over and the guests congratulate the couple, Bill Patterson comes up the aisle and tells Howard that he’s been on the phone for an hour and got him a job on a soap opera for two days a week. It starts on Monday.

Victoria is all for it but Howard is ambivalent because it means canceling the honeymoon in Hawaii which Jessica had given them as a wedding present. Howard asks Jessica what they should do and she replies that she usually doesn’t give advice (which causes Lt. Novak to shake his head in disbelief behind her), but she thinks they should go for it.

Then everyone cheers and we go to credits.

This was a very interesting episode. Quite different from Deadly Lady. It was far less of a classic mystery and perhaps a bit closer to a typical Murder, She Wrote episode. Jessica is nosy more than clever, most of the investigation was of red herrings, and Jessica solves it at the end in a moment of inspiration which gives the audience time to figure it out first.

It also had some really big plot holes. Bigger than I’m used to seeing on Murder, She Wrote.

Right at the very beginning, the intended wedding between Victoria and Howard makes no sense. Somehow Victoria and Howard are getting married in a day or two and she’s discussing basic initial planning with the priest. He’s literally never met the groom and doesn’t care; his only concern is interior decoration and some brief rehearsal. Very brief, in fact, because he has to get to chorus rehearsal in five minutes. There is literally only one person from her side of the family coming, and that’s Jessica. No parents, siblings, cousins, aunts, uncles, or friends—just Jessica. Howard also has no family, though for all she knows he could have a few friends he’s made in the last six months. None of this bothers Victoria because she’s head-over-heels in love with Howard and would do anything, absolutely anything, for him. Howard’s lying to her about his job, and the various indications that he’s having an affair with another woman, only very slightly ruffle her and in no way deter her from going through with the wedding.

And somehow, this doesn’t bother Jessica in the slightest.

The timing of Howard’s performance is basically irreconcilable with the presented facts. He seems to have waited around, in the dress he didn’t like wearing, for the entire length of Freddy York’s performance doing absolutely nothing before he angrily went into Al Drake’s office to demand his money and quit.

Howard’s certainty at seeing Mrs. Drake come in right before his performance also goes nowhere, which is probably because it couldn’t have gone anywhere. What could Mrs. Drake have done, back stage, for however long it took Howard to perform, before leaving and re-entering through the front door? If we’re to believe that Howard was right, then presumably it was to visit Mike. Which seems more than a little far-fetched. She could hardly have hoped to be unobserved during such a busy time. And since this is a night club, they had plenty of time for hanky panky during the day.

Speaking of timing, there’s kind of a plot hole with how they filmed the episode. Al is alive and well in front of the club when he directs the host to give Victoria and Jessica a table. They walk directly to their table and Freddy York is introduced and starts his act within ten seconds of them sitting down (in a continuous shot). Al would have had to have sprinted to his office in time for Freddy to shoot him, and then Freddy would have had to sprint on stage, and I doubt that even that would have worked. Timing it, it’s twenty four seconds from when we last saw Al alive to when the curtain parted as the announcer came out to introduce Freddy and we catch a glimpse of Freddy behind the curtain. (The announcer would have seen if Freddy was absent and wouldn’t have announced him if he didn’t see him before stepping out through the curtain, but seeing Freddy is even more certain.) Granted, there were two cuts, but they were very clearly meant to cover continuous time. Twenty four seconds is not much time to sprint to his office in order to get murdered in his chair. (There was even less time for Freddy to have murdered Al after his set, though it’s clearly established that’s not what he did.) So there’s no way that Freddy could have done it. Which is great. I don’t think that there can be a bigger plot hole than “the murderer couldn’t have done it.”

Oh well.

Moving on, what was the whole thing about Barbara telling Mr. Drake that his wife is at the club, then not doing that? I suppose she’s meant to and this is the reason why she went to his office, but it was over a minute of screen time between when that happened and when we hear the scream. Also—and I had to go back and double check to remember this—she isn’t in a hurry to go find Al. In fact, she watches the bus boy leave then peers to make sure that he’s gone.

I can think of no reason whatever that she could want to get rid of the bus boy. Yet she was more concerned with that than with telling Al his wife was here. What was that about? I doubt that the writers knew, either. And the whole thing where Jessica wanted to find out why Barbara was at Al’s office and so saw Howard with the gun? Completely dropped. Jessica never found out that Barbara was carrying a message for the bus boy.

When Jessica meets Lt. Novak, her manner suggests that she expects him to know who she is and to want her help. It’s meant to set up her being disappointed when Lt. Novak tells her to get lost, but it feels weird. She’s old enough to know that it requires some introduction to put yourself into someone else’s business, and that the police don’t do murder investigations for fun.

It’s not a plot hole, just a bit of sloppy writing, but nothing ever comes of Al’s corpse having been facing the wall. He couldn’t have been shot in that position. Freddy had to have turned him around after shooting him. The only reason to have turned him around was to make it look like he was alive when he was already dead. Yet absolutely nothing comes of it. (This is the sort of thing I mean when I say that Jessica isn’t as clever as in Deadly Lady. An observation like that would have been an obvious point in her favor with Lt. Novak.)

Then there’s the “scientific testing” of the hypothesis that the sound of the gun shot was masked by Freddy’s drum playing, which didn’t involve testing that hypothesis. I suppose that they were testing the related hypothesis that other things could have covered the sound, but why did they never test whether Freddy’s playing would have covered it? Especially for the people back stage, where the gun would have been closer to them than Freddy’s drums? Guns are very, very loud. Far louder than drums.

I guess it was OK that Freddy staged the thing with the lights nearly falling on him and Jessica, but why on earth did Lt. Novak remain convinced that Howard did it after that? Howard was in police custody at the time. Why did Jessica not point this out?

And why was the stuff with the pillow supposed to be remotely convincing? The pillow being sun-faded in a way that exactly matches the settee in Freddy’s dressing room and in no other room works to prove that the pillow came from Freddy’s dressing room, but how on earth does that prove that Freddy murdered Al Drake? The pillow was placed there after the police investigation was over, which means that anyone could have done it. You can make an argument that the murderer would have had to use his own pillow in some sort of exigent circumstance, but not when the murderer was replacing a pillow at his leisure. The murderer would have had to be an idiot to use his own dressing room’s pillow. (Unless he was going for a double-bluff by trying to make it look like someone was trying to frame him.) If I were making a list of the top ten airtight cases, I doubt that I would include: “Somehow, long after the victim was dead, your office pillow wound up on the victim’s couch. How do you explain that, if you didn’t kill him!”

This is an especially big problem when you consider what they don’t have: a pillow with a bullet hole in it. They don’t even have anyone testifying that Al’s settee definitely had a pillow on it shortly before he was killed. In short, there’s no evidence that a pillow was involved in the murder.

And this is leaving out the fact that a pillow only makes a gun very slightly quieter. I’ve seen people test it and a gun with a pillow in front of it is perceptibly less loud. You could definitely pick it out in blinded A/B testing. But that’s about it. It’s still around the threshold for causing hearing damage. But this is just a subset of TV silencers, which work about 1000 times better than real silencers—part of why people who know what they’re talking about tend to call them “sound suppressors” rather than “silencers”. To get an actually quiet gun which only goes “ffft” you need a specially designed silencer with multiple rubber wipers the bullet shoots through (making it require replacement after a few shots). And that only works if you use specially loaded sub-sonic bullets. Ordinary bullets, which travel much faster than the speed of sound, make a loud bang because all supersonic objects do. Only sub-sonic bullets have the possibility of being quiet and the trade-off is that they have far less power in them. That is, they’re less likely to be lethal. This is just part of TV fantasy, though, so there really isn’t a point in complaining that TV silencers are magic, and if we’re allowing TV silencers, I suppose we need to be forgiving of TV pillows, too.

It’s really lucky that Freddy didn’t think of any of this and just confessed.

The importance of that confession in Murder, She Wrote is often overlooked, I think. It’s nice when the evidence is clear, but it’s absolutely crucial when it’s not. When the evidence is as flimsy as it often is, the only thing that makes Jessica look smart is the proof that she’s right which a confession offers. Otherwise she’d seem over-confident in wild guesses.

Incidentally, this is one major reason it bugs me so much when people suggest, as if it’s clever, that Jessica was wrong about who did it or committed the murders herself. The murderer always confesses. Always. This is like suggesting the clever twist in Harry Potter that Harry was deathly afraid of brooms which is why you never saw him touch one! It’s brilliant! Except for the part where he did touch them, prominently, so this is stupid. Or imagine this wonderful idea where in Star Trek Kirk is really Spock, in disguise. That’s why you never see them in the same room together! Except that you see them together in the same room in every episode.

It’s really easy to be clever if you don’t let facts get in the way.

Anyway, with all that said, and not taking any of it back: it was still fun to watch this episode. A lot of that comes down to the acting. Some of it is the pacing, though. Even when not much is happening, you always feel like something is about to happen, which keeps your interest. And I think it does a decent job of making you forget all of the stuff that was never paid off or flat-out contradicted the conclusion. I also suspect that ending on a happy note for Howard and Victoria helps that. A murder investigation produces a liminal space in which normal life can’t happen. That liminal state also allows us to look into things we normally would not be able to see, which is where most of the fun of a murder mystery comes from. The resumption of normal life with something like a wedding definitively closes that liminal state—it brings us over the threshold and back into normality. It’s not required, but I suspect that it greatly helps the story to feel satisfactory. Even when it shouldn’t.

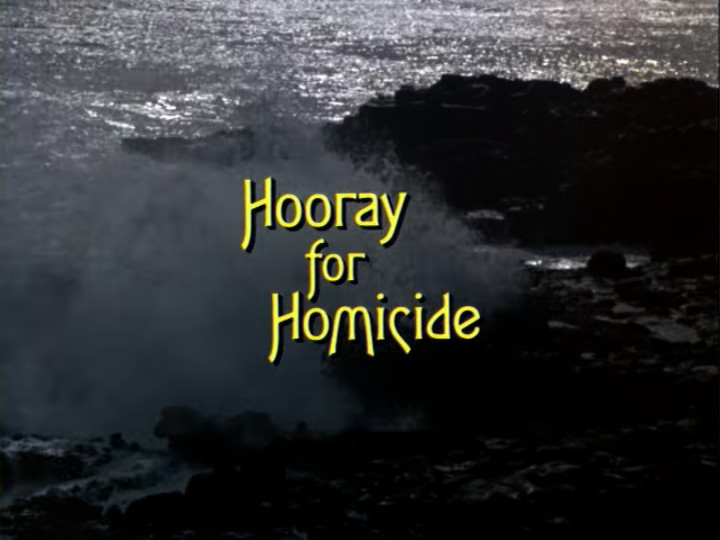

Next week we move south along the coast to Los Angeles in Hooray for Homicide.
