I wonder how many scientists would get this wrong:

You want to know if one basketball team is taller than another, so you measure the players. On one team the average is 6’5″ (15 players, standard deviation 1.3″), while on the other it’s 6’6″ (14 players, standard deviation 1.4″). What is the p-value for this 1″ difference?

I suspect that far more than zero would do what Gemini did when I presented it with this question:

The p-value for the 1-inch difference in the average heights of the two basketball teams is 0.0571.

Explanation of Statistical Test

To determine if the 1-inch difference in average height is statistically significant, a two-sample t-test was performed. This test compares the means of two independent groups to see if the difference between them is due to chance or if it’s a genuine difference in the populations from which the samples were drawn.

Here’s a breakdown of the calculation:

- Hypotheses: The null hypothesis ($H_0$) assumes there is no true difference in the average height of the two teams ($\mu_1 = \mu_2$). The alternative hypothesis ($H_a$) assumes there is a difference ($\mu_1 \neq \mu_2$).

- T-statistic: This value measures the size of the difference relative to the variation in the sample data. A larger absolute value of the t-statistic suggests a stronger difference. The calculated t-statistic for this data is -1.9894.

- Degrees of Freedom (df): This value, 26.44, is a measure of the amount of information available to estimate the population variance. It’s used to find the correct p-value from the t-distribution.

- P-value: The p-value, 0.0571, represents the probability of observing a difference in average heights of at least 1 inch (or more extreme) if the two teams were actually the same height on average. A common significance level is 0.05. Since the calculated p-value of 0.0571 is greater than 0.05, we do not have sufficient statistical evidence to reject the null hypothesis.

This means that while Team 2 is an inch taller on average in this sample, this difference is not statistically significant at a 5% level of significance. It’s plausible that the observed difference is simply due to random chance.

This is completely wrong because it’s a trick question. There is no p-value for this 1″ difference because the entire population was measured.
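To be clear, the arithmetic isn’t where Gemini went wrong. Here’s a minimal Python sketch of what it appears to have computed: Welch’s two-sample t-test (the unequal-variance version, which is where a fractional 26.44 degrees of freedom comes from), run on the summary numbers from the question with heights converted to inches. To stick to the standard library, the t-distribution’s tail is found by numerically integrating its density with Simpson’s rule. The function names are my own, not from any statistics package.

```python
import math

def t_pdf(x: float, df: float) -> float:
    """Density of Student's t-distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    c = math.exp(log_c) / math.sqrt(df * math.pi)
    return c * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_p(t: float, df: float, steps: int = 2000) -> float:
    """Two-sided p-value: 1 minus the t-distribution's mass in [-|t|, |t|].

    The mass on [0, |t|] is found with Simpson's rule and doubled, since the
    density is symmetric about zero. `steps` must be even.
    """
    a, b = 0.0, abs(t)
    h = (b - a) / steps
    total = t_pdf(a, df) + t_pdf(b, df)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2  # Simpson weights: 1, 4, 2, 4, ..., 4, 1
        total += weight * t_pdf(a + i * h, df)
    central = 2 * (h / 3) * total
    return 1 - central

def welch_t_test(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t-test from summary statistics; returns (t, df, p)."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2  # squared standard errors
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df, two_sided_p(t, df)

# Heights in inches: 6'5" = 77, 6'6" = 78
t, df, p = welch_t_test(77, 1.3, 15, 78, 1.4, 14)
print(f"t = {t:.4f}, df = {df:.2f}, p = {p:.4f}")
```

Running it gives t ≈ -1.9894 and df ≈ 26.44, matching Gemini, with a p-value of roughly 0.057. The calculation is fine; it’s just answering a question about random sampling when nobody sampled anything.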

The p-value, used to determine whether a result is statistically significant, is in theory the probability of getting a result at least as extreme as the one observed purely by random chance, if the null hypothesis (usually, that no causal relationship or correlation exists between the two measured things) is true. However, that’s not how it’s actually used in practice.

The p-value that is used in practice is actually the probability that random chance would produce results like these simply through how the members of each group being compared were selected from the total population.

Despite how this may sound, it’s actually a really easy concept, so let me illustrate it with an example. Suppose you want to know whether 1 beer is enough to get people drunk enough that they’re bad at playing ping pong. You select two groups of fifty people each, give one group beer, and give the other group non-alcoholic beer. (Let’s make favorable assumptions on all of the practical stuff, like having taken baseline measurements of their ping pong skill beforehand.)

Now, we know ahead of time that there is variation in the population in alcohol tolerance. Some people can safely operate heavy machinery after six shots of vodka and some people are under the table after one, with most people falling somewhere in between those two extremes (even apart from variations in body weight). So here’s the thing: what are the odds that instead of both groups being exactly representative of the population as a whole, your randomly assigning people from the population to one of the two groups just happened to put more alcohol-tolerant people into the 1-beer group than is representative of the whole population? The laws of probability tell us that if you run this experiment enough times, at least once you’ll randomly have all high-tolerance people in the alcoholic beer group and at least one other time you’ll have all low-tolerance people in the alcoholic beer group.
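Here’s a small simulation of that, a sketch under made-up assumptions: a hypothetical population of 100 people whose alcohol tolerances (in arbitrary units) are drawn from a normal distribution with mean 0 and standard deviation 1, split at random into a 1-beer group and a non-alcoholic group of 50 each, thousands of times over. Nobody drinks anything here; it only measures how lopsided the groups come out by the luck of the draw. The population, the seeds, and the 0.3 cutoff for “noticeably skewed” are all illustrative.

```python
import random
import statistics

def assignment_skews(tolerances, trials=10_000, seed=0):
    """Randomly split the population in half `trials` times and return, for
    each split, the beer group's mean tolerance minus the other group's."""
    rng = random.Random(seed)
    people = list(tolerances)
    half = len(people) // 2
    diffs = []
    for _ in range(trials):
        rng.shuffle(people)
        beer, control = people[:half], people[half:]
        diffs.append(statistics.fmean(beer) - statistics.fmean(control))
    return diffs

# Hypothetical population: 100 tolerance scores in arbitrary units
pop_rng = random.Random(42)
population = [pop_rng.gauss(0, 1) for _ in range(100)]

diffs = assignment_skews(population)
threshold = 0.3  # illustrative cutoff for a "noticeably skewed" split
skewed = sum(d > threshold for d in diffs) / len(diffs)
print(f"beer group more tolerant by > {threshold}: {skewed:.1%} of splits")
print(f"most lopsided split: {max(diffs):+.2f}")
```

Every split comes from the same population, yet a small but real fraction of them hand the beer group a tolerance edge before anyone has had a sip.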

What people are measuring by p-value, in almost all scientific experiments, is how likely this kind of skew is. They are not measuring the effect of, for example, random errors in the actual measurements taken. (Conveniently for the researchers, those are always assumed to be perfect, or at least to always balance out.)
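A randomization test (also called a permutation test) makes this explicit, because it computes a p-value from nothing but the group assignment: keep every measurement exactly as recorded, reshuffle who counts as “beer” and who counts as “non-alcoholic,” and count how often the reshuffled groups differ at least as much as the real ones did. Here’s a minimal sketch; the function name and the ping-pong score changes are hypothetical, and this is a different technique from the t-test in Gemini’s answer, chosen because it shows the idea directly.

```python
import random

def randomization_p_value(group_a, group_b, trials=10_000, seed=0):
    """Two-sided randomization (permutation) test on the difference in means.

    Every measured value is treated as exact; the only randomness modeled is
    which people landed in which group.
    """
    def mean(xs):
        return sum(xs) / len(xs)

    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    rng = random.Random(seed)
    at_least_as_extreme = 0
    for _ in range(trials):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        # Tiny tolerance so floating-point rounding doesn't undercount ties
        if diff >= observed - 1e-12:
            at_least_as_extreme += 1
    # Add-one correction: the actual assignment counts as one possible shuffle
    return (at_least_as_extreme + 1) / (trials + 1)

# Hypothetical change in ping-pong score (after minus baseline)
beer = [-3, -1, -4, 0, -2, -5, -1, -3]
no_alcohol = [0, 1, -1, 2, 0, -1, 1, 0]
print(f"p = {randomization_p_value(beer, no_alcohol):.4f}")
```

Note what the code never looks at: how accurate the scores are. A mis-scored game goes straight into both the observed difference and every shuffle.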

This is why the question I started this post with is a trick question: it’s got all of the information that you’d find in a random trial, presented in roughly the way you’d get it in a random trial, except there was no random selection. Within the hypothetical of the question, the entire population we care about—the two basketball teams—was measured. If you want to be super nit-picky, you can say that the p-value is zero since there was no chance of this being produced by random selection, in the same sense that the probability of a coin set down on the table with the head facing up turning up tails is zero.

But the thing is, there are scientists (evidence points to it being an awful lot of scientists) who don’t actually know this is what they’re doing when they run a p-value calculation. And, of course, there are contexts where this isn’t awful. If they’re copying a format for experiments where this happens to work, well, then, it happens to work. But because they don’t understand what it’s about, they don’t realize that the p-value is the absolute floor for how meaningless the result is. That is to say, if a scientist does a randomized (controlled) trial and gets a p-value of .045, which is below the industry threshold of .05, this means that even if everything else about the study is perfect, there is still a 4.5% chance of the random assignment alone producing a result at least that strong when the thing being tested does nothing.

Imperfections in instruments, fluctuations in the things to be measured, defects in the measuring tools, biases in the researchers, flaws in the study design—these all raise the chance of the results being meaningless, potentially by quite a lot.
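To put a rough number on one of those, here’s a sketch of what a biased measurement does. It simulates thousands of beer experiments in which the beer has no effect at all, except that a hypothetical scorer who knows who drank marks the beer group down by 0.3 points (arbitrary units, against a spread of 1), and counts how often the test comes back below .05. The standard-normal scores, the 0.3 bias, and the seed are assumptions for illustration; the group size of 50 comes from the example above, and the p-value uses a large-sample normal approximation rather than an exact t-test.

```python
import math
import random
import statistics

def mean_and_variance(xs):
    """Sample mean and (n - 1) sample variance."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

def false_positive_rate(bias, n=50, experiments=4_000, alpha=0.05, seed=1):
    """Fraction of simulated no-effect experiments that reach p < alpha.

    The beer has no real effect; `bias` is a systematic error added to every
    measured score in the beer group (e.g. a scorer who knows who drank).
    """
    rng = random.Random(seed)
    normal = statistics.NormalDist()  # standard normal
    hits = 0
    for _ in range(experiments):
        beer = [rng.gauss(0, 1) + bias for _ in range(n)]
        control = [rng.gauss(0, 1) for _ in range(n)]
        m_b, v_b = mean_and_variance(beer)
        m_c, v_c = mean_and_variance(control)
        z = (m_b - m_c) / math.sqrt(v_b / n + v_c / n)
        p = 2 * (1 - normal.cdf(abs(z)))  # large-sample two-sided p-value
        if p < alpha:
            hits += 1
    return hits / experiments

honest = false_positive_rate(bias=0.0)
biased = false_positive_rate(bias=-0.3)  # hypothetical scorer bias
print(f"honest scoring: {honest:.1%} of no-effect experiments 'significant'")
print(f"biased scoring: {biased:.1%} of no-effect experiments 'significant'")
```

With honest measurements, roughly 5% of these no-effect experiments clear the .05 bar, which is all the p-value promises. With the biased scorer the rate should be several times higher, even though the beer still does nothing.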

Of course, if you want to be cynical about it, it’s in scientists’ best interests to not know any of this.

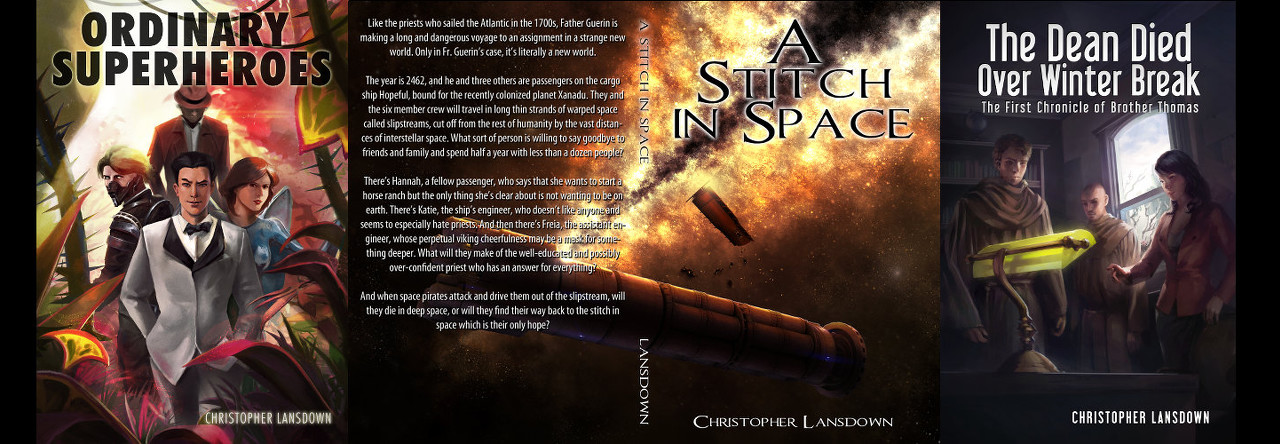

Discover more from Chris Lansdown
